A Focus on Focus

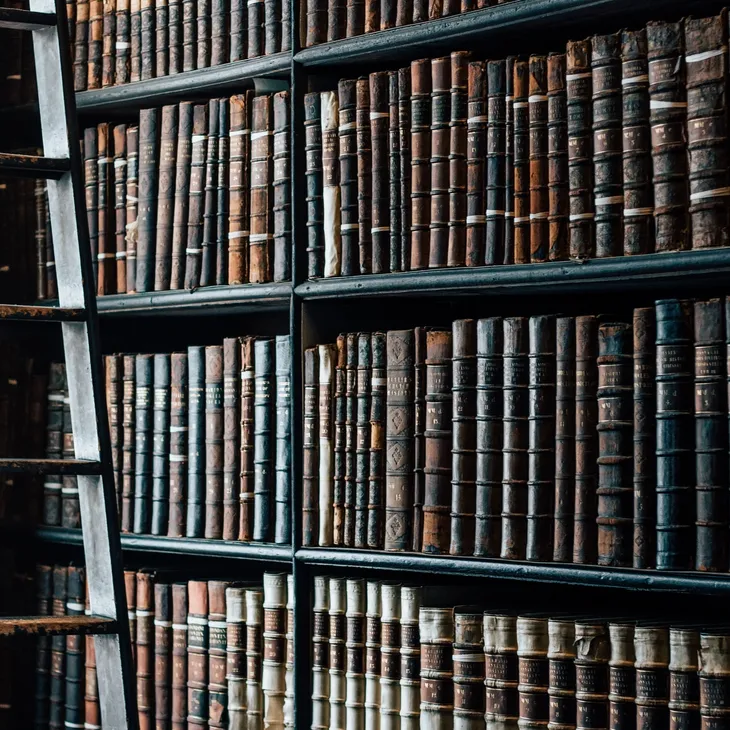

Unlike many participants in the natural language processing space, Isaeus will not attempt to reduce our entire, incomprehensibly complex world to an ostensibly simple set of 1s and 0s. Rather, we limit our models' knowledge to the legal milieu: while immense complexity is inherent in any LLM, excluding unrelated domains removes as much noise as possible. We hypothesize that this epistemological reduction will engender a more specialized agent, one better able to navigate the semantic minutiae of the legal world and to avoid the hallucinations that plague general-purpose LLMs.

The transformer architecture underpinning LLMs is new (it originated in a 2017 Google paper), and researchers worldwide, ourselves included, learn more about it each day. Those who endeavor merely to avoid failure inevitably find it. Thankfully, Isaeus harbors grander ambitions that cannot be reduced to a success/failure dichotomy: if our hypothesis holds, our models will secure their place in the vanguard of domain-specific LLMs. Regardless of the outcome, we are happy to help advance the epistemological frontier of this nascent field.